Cross-species pose estimation plays a vital role in studying neural mechanisms and behavioral patterns while serving as a fundamental tool for behavior monitoring and prediction. However, conventional image-based approaches face substantial limitations, including excessive storage requirements, high transmission bandwidth demands, and massive computational costs. To address these challenges, we introduce an image-free pose estimation framework based on single-pixel cameras operating at ultra-low sampling rates (6.260 × 10⁻⁴). Our method eliminates the need for explicit or implicit image reconstruction, instead directly extracting pose information from highly compressed single-pixel measurements. It dramatically reduces data storage and transmission requirements while maintaining accuracy comparable to traditional image-based methods. Our solution provides a practical approach for real-world applications where bandwidth and computational resources are constrained.

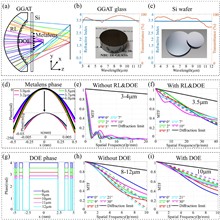

The integration of mid-wave infrared (MWIR) and long-wave infrared (LWIR) imaging into a compact high-performance system remains a significant challenge in infrared optics. In this work, we present a dual-band infrared imaging system based on hybrid refractive-diffractive-metasurface optics. The system integrates a silicon-based metalens for the MWIR channel and a hybrid refractive-diffractive lens made of high-refractive-index chalcogenide glass for the LWIR channel. It achieves a compact total track length (TTL) of 11.31 mm. The MWIR channel features a 1.0 mm entrance pupil diameter, a 10° field of view (FOV), and achromatic imaging across the 3–4 µm spectral range with a focal length of 1.5 mm. The LWIR channel provides an 8.7 mm entrance pupil diameter, a 30° FOV, and broadband achromatic correction over the 8–12 µm spectral range with a focal length of 13 mm. To further enhance spatial resolution and recover fine image details, we employ low-rank adaptation (LoRA) fine-tuning within a physics-informed StableSR framework. This hybrid optical approach establishes, to our knowledge, a new paradigm in dual-band imaging systems by leveraging the complementary advantages of metalens dispersion engineering, diffractive phase modulation, and conventional refractive optics, delivering a lightweight, multispectral imaging solution with superior spectral discrimination and system compactness.
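As a quick consistency check on the quoted apertures, the f-number of each channel follows from the standard definition $N = f/D$ (focal length over entrance pupil diameter). The values below are derived here from the figures in the abstract and are not stated by the authors:

$$
N_{\mathrm{MWIR}} = \frac{1.5\ \mathrm{mm}}{1.0\ \mathrm{mm}} = 1.5, \qquad
N_{\mathrm{LWIR}} = \frac{13\ \mathrm{mm}}{8.7\ \mathrm{mm}} \approx 1.49
$$

Under this reading, both channels operate at roughly f/1.5 despite their very different focal lengths.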

Non-line-of-sight (NLOS) imaging enables the detection and reconstruction of hidden objects around corners, offering promising applications in autonomous driving, remote sensing, and medical diagnosis. However, existing steady-state NLOS imaging methods face challenges in achieving high efficiency and precision due to the need for multiple diffuse reflections and incomplete Fourier amplitude sampling. This study proposes, to our knowledge, a novel steady-state NLOS imaging technique via polarization differential correlography (PDC-NLOS). By employing the polarization difference of the laser speckle, the method designs a single-shot polarized speckle illumination strategy. The fast and stable real-time encoding for hidden objects ensures stable imaging quality of the PDC-NLOS system. The proposed method demonstrates millimeter-level imaging resolution when imaging horizontally and vertically striped objects.

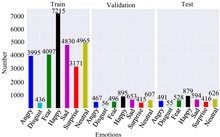

Deep learning-assisted facial expression recognition has been extensively investigated in sentiment analysis, human-computer interaction, and security surveillance. Generally, the recognition accuracy reported in previous work depends on high-quality images and powerful computational resources. In this work, we quantitatively investigate the impact of frequency-domain filtering on spatial-domain facial expression recognition. Based on the Fer2013 dataset, we filter out 82.64% of high-frequency components, resulting in a decrease of 3.85% in recognition accuracy. Our findings clearly demonstrate the essential role of low-frequency components in facial expression recognition, which helps reduce the reliance on high-resolution images and improve the efficiency of neural networks.
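The kind of frequency-domain filtering described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `lowpass_filter` and the centered square window are assumptions. Fer2013 images are 48 × 48 pixels, and keeping a 20 × 20 window of the spectrum removes 1 − 400/2304 ≈ 82.64% of the coefficients, which matches the reported figure arithmetically, but whether the authors used a square window is not stated.

```python
import numpy as np

def lowpass_filter(image, keep_fraction):
    """Keep a centered square of low-frequency Fourier coefficients.

    Hypothetical helper: `keep_fraction` is the approximate share of
    coefficients retained; everything outside the window is set to zero.
    Returns the filtered image and the fraction actually kept.
    """
    h, w = image.shape
    # Shift the spectrum so the zero frequency sits at the array center.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    side = np.sqrt(keep_fraction)
    kh = max(1, int(round(h * side)))
    kw = max(1, int(round(w * side)))
    mask = np.zeros((h, w), dtype=bool)
    top, left = h // 2 - kh // 2, w // 2 - kw // 2
    mask[top:top + kh, left:left + kw] = True
    # An even-sized window is not perfectly symmetric about zero frequency,
    # so a small imaginary residue can remain; only the real part is kept.
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * mask)).real
    return filtered, mask.mean()

# Usage on a random stand-in for a 48 x 48 grayscale face image.
rng = np.random.default_rng(0)
face = rng.random((48, 48))
smoothed, kept = lowpass_filter(face, 0.1736)
print(f"fraction of coefficients kept: {kept:.4f}")
```

The filtered image could then be fed to any spatial-domain classifier to measure the accuracy change.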

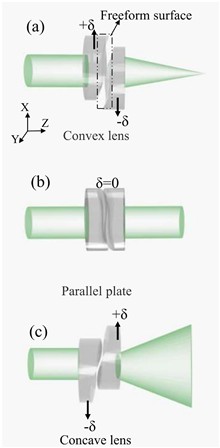

This study proposes compact Alvarez varifocal lenses with a wide varifocal range, which consist of a set of Alvarez lenses and three sets of ordinary lenses. The Alvarez lenses have a double freeform surface and are driven by a cam-driven structure. The axial size of the proposed varifocal Alvarez lenses is only 30.50 mm. The experimental results show that the proposed varifocal lens can achieve a focal length range from 15 to 75 mm, and the imaging quality is still in an acceptable range for optical lens requirements. The compact varifocal Alvarez lenses are expected to be used in surveillance systems, industrial inspection, and machine vision.

Photon-level single-pixel imaging overcomes the reliance of traditional imaging techniques on large-scale array detectors, offering advantages such as high sensitivity, high resolution, and efficient photon utilization. In this paper, we propose a photon-level dynamic feature single-pixel imaging method, leveraging the frequency domain sparsity of the object’s dynamic features to construct a compressed measurement system through discrete random photon detection. In the experiments, we successfully captured feature frequencies of 167 and 200 Hz and achieved high-quality image reconstruction with a data compression ratio of 20%. Our approach introduces a new detection dimension, significantly expanding the applications of photon-level single-pixel imaging in practical scenarios.

The integration of deep learning into computational imaging has driven substantial advancements in coherent diffraction imaging (CDI). While physics-driven neural networks have emerged as a promising approach through their unsupervised learning paradigm, their practical implementation faces critical challenges: measurement uncertainties in physical parameters (e.g., the propagation distance and the size of sample area) severely degrade reconstruction quality. To overcome this limitation, we propose a deep-learning-enabled spatial sample interval optimization framework that synergizes physical models with neural network adaptability. Our method embeds spatial sample intervals as trainable parameters within a PhysenNet architecture coupled with Fresnel diffraction physics, enabling simultaneous image reconstruction and system parameter calibration. Experimental validation demonstrates robust performance with structural similarity (SSIM) values consistently maintained at 0.6 across diffraction distances spanning 10–200 mm, using a 1024 × 1024 region of interest (ROI) from a 1624 × 1440 CCD (pixel size: 4.5 μm) under 632.8 nm illumination. This framework has excellent fault tolerance, that is, it can still maintain high-quality image restoration even when the propagation distance measurement error is large. Compared to conventional iterative reconstruction algorithms, this approach can transform fixed parameters into learnable parameters, making almost all image restoration experiments easier to implement and enhancing system robustness against experimental uncertainties. This work establishes, to our knowledge, a new paradigm for adaptive diffraction imaging systems capable of operating in complex real scenarios.
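To make the role of the spatial sample interval concrete, the sketch below implements a standard Fresnel transfer-function propagator in NumPy, with the sample interval exposed as the parameter `pixel_pitch`. It illustrates only the forward physics that such a framework could differentiate through; it is not the PhysenNet model, and the function name and test object are assumptions.

```python
import numpy as np

def fresnel_propagate(field, wavelength, distance, pixel_pitch):
    """Propagate a complex field using the Fresnel transfer function.

    `pixel_pitch` is the spatial sample interval in meters; in a
    learnable setting it would be a trainable parameter rather than a
    fixed constant (assumption for illustration).
    """
    n_rows, n_cols = field.shape
    fy = np.fft.fftfreq(n_rows, d=pixel_pitch)
    fx = np.fft.fftfreq(n_cols, d=pixel_pitch)
    fxx, fyy = np.meshgrid(fx, fy)  # both have shape (n_rows, n_cols)
    k = 2 * np.pi / wavelength
    transfer = np.exp(1j * k * distance) * np.exp(
        -1j * np.pi * wavelength * distance * (fxx**2 + fyy**2)
    )
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Usage: a square aperture, 632.8 nm light, 4.5 um samples, 100 mm distance.
aperture = np.zeros((64, 64), dtype=complex)
aperture[24:40, 24:40] = 1.0
diffracted = fresnel_propagate(aperture, 632.8e-9, 0.1, 4.5e-6)
intensity = np.abs(diffracted) ** 2  # what a camera would record
```

Because the transfer function has unit modulus, the propagation conserves total energy, and a wrong `pixel_pitch` or `distance` changes only the quadratic phase term, which is why both can in principle be calibrated from the measured diffraction pattern.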

Terahertz (THz) imaging based on the Rydberg atom achieves high sensitivity and frame rates but faces challenges in spatial resolution due to diffraction, interference, and background noise. This study introduces a polarization filter and a deep learning-based method using a physically informed convolutional neural network to enhance resolution without pre-trained datasets. The technique reduces diffraction artifacts and achieves lens-free imaging with a resolution exceeding 1.25 lp/mm over a wide field of view. This advancement significantly improves the imaging quality of the Rydberg atom-based sensor, expanding its potential applications in THz imaging.

The relative motion between an imaging system and its target usually leads to image blurring. We propose a motion deblurring imaging system based on the Fourier-transform ghost diffraction (FGD) technique, which can overcome the spatial resolution degradation caused by both lateral and axial translational motion of the target. Both the analytical and experimental results demonstrate that when the effective transmission aperture of the receiving lens is larger than the target’s lateral motion amplitude, and even if the target is located in the near-field region of the source, the amplitude and mode of the target’s motion have no effect on the quality of FGD, and high-resolution imaging in the spatial domain can always be achieved by the phase-retrieval method from the FGD patterns. Corresponding results based on the conventional Fourier diffraction system are also compared and discussed.

Non-line-of-sight (NLOS) imaging has potential in autonomous driving, robotic vision, and medical imaging, but it is hindered by extensive scans. In this work, we provide a time-multiplexing NLOS imaging scheme that is designed to reduce the number of scans on the relay surface. The approach introduces a time delay at the transmitting end, allowing two laser pulses with different delays to be sent per period and enabling simultaneous acquisition of data from multiple sampling points. Additionally, proof-of-concept experiments validate the feasibility of this approach, achieving reconstruction with half the scans. These results demonstrate a promising strategy for real-time NLOS imaging.

Single-molecule localization microscopy (SMLM) has pushed resolution to sub-40 nm. Combined with structured illumination, lateral resolution can be doubled or the axial resolution can be improved fourfold. However, current techniques struggle to balance the lateral and axial resolutions. Here, we report a new modulated illumination single-molecule localization modality, isoFLUX. Utilizing two objective lenses to form interference patterns along the x–z and y–z directions, the lateral and axial resolutions can be improved simultaneously. Compared to SMLM, isoFLUX maintains a twofold average enhancement in both lateral and axial resolutions under an astigmatic point spread function (PSF), and a 1.5-fold enhancement in the lateral resolution and a 2.5-fold enhancement in the axial resolution under the saddle-point PSF.

Conventional methods for near-field characterization have typically relied on the nanoprobe to point-scan the field, rendering the measurements vulnerable to external environmental influences. Here, we study the direct far-field imaging of the near-field polarizations based on the four-wave mixing effect. We construct a simulation model to realize the instantaneous extraction of the near-field distributions of a wide range of structured light fields, such as cylindrical vector vortex beams, plasmonic Weber beams, and topological spin textures, including photonic skyrmions and merons. This method is valuable for the studies on manipulation of structured light fields and light–matter interaction at the micro/nano scales.

Hyperspectral-depth imaging is of great importance in many fields. However, it is difficult for most systems to achieve good spectral resolution and accurate location at the same time. Here, we present a hyperspectral-depth single-pixel imaging system that exploits the two reflection beam paths of a spatial light modulator to provide one-dimensional depth and spectral information of the object; then, they are combined with the modulation patterns and compressed-sensing algorithm to construct the hyperspectral-depth image, even at a sampling ratio of 25%. In our experiments, a spectral resolution of 1.2 nm in the range of 420 to 780 nm is achieved, with a depth measurement error of less than 1 cm. Our work thus provides a new way for hyperspectral-depth imaging.

With the rapid advancement of three-dimensional (3D) scanners and 3D point cloud acquisition technology, the application of 3D point clouds has been increasingly expanding in various fields. However, due to the limitations of 3D sensors, the collected point clouds are often sparse and non-uniform. In this work, we introduce local tactile information into the point cloud super-resolution task to aid in enhancing the resolution of the point cloud using fine-grained local details. Specifically, the local tactile point cloud is denser and more accurate compared to the low-resolution point cloud. By leveraging tactile information, we can obtain better local features. Therefore, we propose a feature extraction module that can efficiently fuse visual information with dense local tactile information. This module leverages the features from both modalities to achieve improved super-resolution results. In addition, we introduce a point cloud super-resolution dataset that includes tactile information. Qualitative and quantitative experiments show that our work performs much better than existing similar works that do not include tactile information, both in terms of handling low-resolution inputs and revealing high-fidelity details.

Ghost imaging has shown promise for tracking the moving target, but the requirement for adequate measurements per motion frame and the image-dependent tracking process limit its applications. Here, we propose a background removal differential model based on asymptotic gradient patterns to capture the displacement of a translational target directly from single-pixel measurements. By staring at the located imaging region, we evenly distribute optimally ordered Hadamard basis patterns to each frame; thus, the image can be recovered from fewer intraframe measurements with gradually improved quality. This makes ghost tracking and imaging more suitable for practical applications.

Single-pixel imaging (SPI) is a computational imaging technique that is able to reconstruct high-resolution images using a single-pixel detector. However, most SPI demonstrations have been mainly focused on macroscopic scenes, so their applications to biological specimens are generally limited by constraints in space-bandwidth-time product and spatial resolution. In this work, we further enhance SPI’s imaging capabilities for biological specimens by developing a high-resolution holographic system based on heterodyne holography. Our SPI system achieves a space-bandwidth-time product of 41,667 pixel/s and a lateral resolution of 4–5 μm, which represent state-of-the-art technical indices among reported SPI systems. Importantly, our SPI system enables detailed amplitude imaging with high contrast for stained specimens such as epithelial and esophageal cancer samples, while providing complementary phase imaging for unstained specimens including molecular diagnostic samples and mouse brain tissue slices, revealing subtle refractive index variations. These results highlight SPI’s versatility and establish its potential as a powerful tool for advanced biomedical imaging applications.

The reflection matrix optical coherence tomography (RM-OCT) method has made significant progress in extending imaging depth within scattering media. However, the current method of measuring the reflection matrix (RM) through uniform sampling results in relatively long data collection time. This study demonstrates that the RM in scattering media exhibits sparsity. Consequently, a compressed sensing (CS)-based technique for measuring the RM is proposed and applied to RM-OCT images. Experimental results show that this method requires less than 50% of the traditional sampling data to recover target information within scattering media, thereby significantly reducing data acquisition time. These findings not only expand the theory of the RM but also provide a more efficient measurement approach. This advancement opens up broader applications for CS techniques in RM-OCT and holds great potential for improving imaging efficiency in scattering media.

In this Letter, a coding aperture design framework is introduced for data sampling of a CUP-VISAR system in laser inertial confinement fusion (ICF) research. It enhances shock wave velocity fringe reconstruction through feature fusion with a convolutional variational auto-encoder (CVAE) network. Simulation and experimental results indicate that, compared to random coding aperture, the proposed coding matrices exhibit superior reconstruction quality, achieving more accurate fringe pattern reconstruction and resolving coding information aliasing. In the experiments, the system signal-to-noise ratio (SNR) and reconstruction quality can be improved by increasing the light transmittance of the encoding matrix. This framework aids in diagnosing ICF in challenging experimental settings.

Imaging through scattering media remains a formidable challenge in optical imaging. Exploiting the memory effect presents new opportunities for non-invasive imaging through the scattering medium by leveraging speckle correlations. Traditional speckle correlation imaging often utilizes a random phase as the initial phase, leading to challenges such as convergence to incorrect local minima and the inability to resolve ambiguities in object orientation. Here, a novel method for high-quality reconstruction of single-shot scattering imaging is proposed. By employing the initial phase obtained from bispectral analysis in the subsequent phase retrieval algorithm, the convergence and accuracy of the reconstruction process are notably improved. Furthermore, a robust search technique based on an image clarity evaluation function successfully determines the optimal reconstruction size. The study demonstrates that the proposed method can obtain high-quality reconstructed images compared with the existing scattering imaging approaches. This innovative approach to non-invasive single-shot imaging through strongly scattering media shows potential for applications in scenarios involving moving objects or dynamic scattering imaging scenes.

Existing two-step Fourier single-pixel imaging (FSPI) suffers from low noise-robustness, and three-step FSPI and four-step FSPI improve the noise-robustness but at the cost of more measurements. In this Letter, we propose a method to improve the noise-robustness of two-step FSPI without additional illumination patterns or measurements. In the proposed method, the measurements from base patterns are replaced by the average values of the measurements from two sets of phase-shift patterns. Thus, the imaging degradation caused by the noise in the measurements from base patterns can be avoided, and more reliable Fourier spectral coefficients are obtained. The imaging quality of the proposed robust two-step FSPI is similar to that of three-step FSPI and four-step FSPI. Simulations and experimental results validate the effectiveness of the proposed method.

Range-gated imaging has the advantages of long imaging distance, high signal-to-noise ratio, and good environmental adaptability. However, conventional range-gated imaging utilizes a single laser pulse illumination modality, which can only resolve a single depth of ranging in one shot. Three-dimensional (3D) imaging has to be obtained from multiple shots, which limits its real-time performance. Here, an approach of range-gated imaging using a specific double-pulse sequence is proposed to overcome this limitation. With the help of a calibrated double-pulse range-intensity profile, the depth of static targets can be calculated from the measurement of a single shot. Moreover, the double-pulse approach is beneficial for real-time depth estimation of dynamic targets. Experimental results indicate that, compared to the conventional approach, the depth of field and depth resolution are increased by 1.36 and 2.20 times, respectively. It is believed that the proposed double-pulse approach provides a potential new paradigm for range-gated 3D imaging.

Brain imaging techniques provide in vivo insight into structural and functional phenotypes that are physiologically and clinically relevant. However, most existing brain imaging techniques struggle to balance the trade-offs among temporal resolution, spatial resolution, and field of view (FOV). Here, we propose a high-resolution photoacoustic microscopy (PAM) system based on a transparent ultrasound transducer (TUT). The system not only retains the advantage of the fast imaging speed of pure optical scanning but also has an imaging FOV of up to 20 mm × 20 mm, which can easily enable rapid imaging of the whole mouse brain in vivo. Based on experimental validation of brain injury, glioma, and cerebral hemorrhage in mice, the system has the capability to visualize the vascular structure and hemodynamic changes in the cerebral cortex. TUT-based PAM provides an important research tool for rapid multi-parametric brain imaging in small animals, providing a solid foundation for the study of brain diseases.

Phase unwrapping is a crucial process in the field of optical measurement, and the effectiveness of unwrapping directly affects the accuracy of final results. This study proposes a multi-level grid method that can efficiently achieve phase unwrapping. First, the wrapped phase image to be processed is divided into small grids, and each grid is unwrapped in multiple directions. Then, a level-by-level coarse-graining mesh method is employed to eliminate the new data “faults” generated from the previous level of grid processing. Finally, the true phase results are obtained by iterating to the coarsest grid through the unwrapping process. In order to verify the effectiveness and superiority of the proposed method, a numerical simulation is first applied. Further, three typical flow fields are selected for experiments, and the results are compared with flood-fill and multi-grid methods for accuracy and efficiency. The proposed method obtains true phase information in just 0.5 s; moreover, it offers more flexibility in threshold selection compared to the flood-fill and region-growing methods. In summary, the proposed method can solve the phase unwrapping problems for moiré fringes, which could provide possibilities for the intelligent development of moiré deflection tomography.
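For readers unfamiliar with the underlying problem, the sketch below shows phase wrapping and the classic one-dimensional (Itoh-style) unwrapping step that grid-based methods build on. It is not the multi-level grid method of this work; the helper name `unwrap_1d` and the synthetic ramp are assumptions for illustration.

```python
import numpy as np

def unwrap_1d(wrapped):
    """Unwrap a 1D phase signal by integrating re-wrapped differences.

    Valid only when the true phase changes by less than pi between
    neighboring samples.
    """
    diffs = np.diff(wrapped)
    # Bring each neighbor difference back into [-pi, pi).
    diffs = (diffs + np.pi) % (2 * np.pi) - np.pi
    return wrapped[0] + np.concatenate(([0.0], np.cumsum(diffs)))

# A smooth phase ramp spanning three full cycles.
true_phase = np.linspace(0, 6 * np.pi, 200)
# Measured (wrapped) phase is confined to (-pi, pi].
wrapped = np.angle(np.exp(1j * true_phase))
recovered = unwrap_1d(wrapped)
print(np.allclose(recovered, true_phase))
```

In two dimensions, noise and undersampling make the result depend on the integration path, which is the difficulty that flood-fill, region-growing, and grid-based strategies each address differently.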

The gravimeter inclination is a significant parameter for cold atom gravimeters, and the counter-propagating Raman beams should be exactly parallel to the local vector of gravity. The tiltmeters, essential devices in cold atom gravimeters, are used to determine the optimum inclination of Raman beams and compensate for the inclination error. However, the conventional tiltmeters may lead to system errors in cold atom gravimeters due to their nonlinearity and drift. In this study, we establish an optical interferometer inside the cold atom gravimeter by placing a hollow beam splitter plate in the path of the Raman beams. This optical interferometer acts as a tiltmeter to measure the inclination change of the Raman beams without influencing the gravity measurement. We prove that our optical tiltmeter (OT) works well with field assembly. Comparisons of our OT and commercial tiltmeters reveal that the nonlinearity of our OT is at least one-tenth of that of the commercial tiltmeters, and that the drift of our OT is at least 23 µrad less than that of the commercial tiltmeters over 90 h measurements. This can reduce the typical value of the atom gravimeter system error by 4 µGal. Further, a comparison of measured gravity to inclination deviation calibrated our OT and further validated that our OT outperforms commercial tiltmeters. This work enables more precise measurement of Raman beam angle variations and facilitates the calibration of installed tiltmeters, whether in the laboratory or the field.

Dual-comb hyperspectral digital holography (DC-HSDH) is an emerging technique employing two optical frequency combs with slightly different repetition frequencies for hyperspectral holographic imaging. Leveraging the unique capabilities of dual-comb interferometry (DCI), DC-HSDH enables the acquisition of phase maps and amplitude maps for multiple wavelengths in parallel, showcasing advantages in imaging speed and robustness. This study introduces a simplified DC-HSDH system based on spatial heterodyne DCI. It reduces the number of required optical devices in comparison to conventional DC-HSDH systems, leading to a streamlined system structure, reduced electric power consumption, and enhanced optical power efficiency. Additionally, it effectively improves the space-time bandwidth product by doubling the temporal bandwidth efficiency. Experiments were performed to validate the system. The proposed system successfully retrieved the three-dimensional profile of a stepped reflector without ambiguity, and the transmission spectrum of an absorbing gas was obtained simultaneously.

Scanning time-of-flight light detection and ranging (LiDAR) is the predominant technique for autonomous driving. Mainstream mechanical scanning is reliable but limited in speed and usually has blind areas. This work proposes a scanning method that uses an acousto-optic modulator to add high-frequency scanning on the slow axis of traditional automotive LiDAR linear scanning. It eliminated blind areas and enhanced the angular resolution to the size of the laser divergence angle. In experiments, this method increased the recognition probability of small targets at approximately 40 m from 70% to over 90%, providing an effective solution for future high-level autonomous driving.

Although single-pixel correlated imaging has the capability to capture images in complex environments, it still encounters challenges such as high computational complexity, limited imaging efficiency, and reduced imaging quality under low-light conditions. We propose a symmetrically related random phase-based correlated imaging method, which reduces the number of required random scattering media, enhances computational efficiency, and mitigates system noise interference. Single-pixel correlated imaging can be completed within 2 min using this approach. The experiments demonstrated that both the constructed dual-path thermal-optical correlated imaging system and the single-path computational correlated imaging system achieved high-quality imaging even under low-light conditions.

Structured illumination microscopy (SIM) is a pivotal technique for dynamic subcellular imaging in live cells. Conventional SIM reconstruction algorithms depend on accurately estimating the illumination pattern and can introduce artifacts when this estimation is imprecise. Although recent deep-learning-based SIM reconstruction methods have improved speed, accuracy, and robustness, they often struggle with out-of-distribution data. To address this limitation, we propose an awareness-of-light-field SIM (AL-SIM) reconstruction approach that directly estimates the actual light field to correct for errors arising from data distribution shifts. Through comprehensive experiments on both simulated filament structures and live BSC1 cells, our method demonstrates a 7% reduction in the normalized root mean square error (NRMSE) and substantially lowers reconstruction artifacts. By minimizing these artifacts and improving overall accuracy, AL-SIM broadens the applicability of SIM for complex biological systems.

Computational ghost holography is a single-pixel imaging technique that has garnered significant attention for its ability to simultaneously acquire both the amplitude and phase images of objects. Typically, single-pixel imaging schemes rely on real-value orthogonal bases, such as Hadamard, Fourier, and wavelet bases. In this Letter, we introduce a novel computational ghost holography scheme with Laguerre–Gaussian (LG) modes as the complex orthogonal basis. It is different from the traditional methods that require the number of imaging pixels to exactly match the number of modulation modes. Our method utilizes 4128 distinct LG modes for illumination. By employing the second-order correlation (SOC) and TVAL3 compressed sensing (CS) algorithms, we have successfully reconstructed the amplitude and phase images of complex objects, and the actual spatial resolution obtained by the experiments is about 70 µm. Due to the symmetry of the LG modes, objects with rotational symmetry can be recognized and imaged using fewer modes. The difference between bucket detection and zero-frequency detection is analyzed theoretically and verified experimentally. Moreover, in the process of object reconstruction, the advanced image processing function can be seamlessly integrated via the preprocessing of the LG modes. As such, it may find a wide range of applications in biomedical diagnostics and target recognition.

In this Letter, we present general analytical expressions for arbitrary n-step phase-shifting Fourier single-pixel imaging (FSI). We also design experiments capable of implementing arbitrary n-step phase-shifting FSI and compare the experimental results, including the image quality, for 3- to 6-step phase-shifting cases without loss of generality. These results suggest that, compared to the 4-step method, these FSI approaches with a larger number of steps exhibit enhanced robustness against noise while ensuring no increase in data-acquisition time. These approaches provide us with more strategies to perform FSI for different steps, which could offer guidance in balancing the tradeoff between the image quality and the number of steps encountered in the application of FSI.
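The idea of n-step phase shifting can be checked numerically with the standard synchronous-detection sum, which is valid for n ≥ 3 equally spaced phase shifts. The sketch below simulates bucket readings for sinusoidal patterns and recovers one Fourier coefficient. It uses the textbook formula and is not necessarily the exact expression derived in the Letter; the function name and the pattern offset `a` and contrast `b` are assumptions.

```python
import numpy as np

def fourier_coefficient(image, u, v, n_steps, a=0.5, b=0.5):
    """Estimate one 2D DFT coefficient from n phase-shifted readings.

    Patterns are a + b*cos(theta + phi_k) with phi_k = 2*pi*k/n_steps,
    and each reading is the total light collected by a single-pixel
    (bucket) detector. Requires n_steps >= 3.
    """
    h, w = image.shape
    y, x = np.mgrid[0:h, 0:w]
    theta = 2 * np.pi * (u * x / w + v * y / h)
    phases = 2 * np.pi * np.arange(n_steps) / n_steps
    readings = np.array(
        [np.sum(image * (a + b * np.cos(theta + p))) for p in phases]
    )
    # The offset term and the conjugate term cancel in this weighted sum,
    # leaving (n_steps * b / 2) times the DFT coefficient.
    return 2.0 / (n_steps * b) * np.sum(readings * np.exp(1j * phases))

# Compare 3- to 6-step estimates against a direct FFT of the scene.
rng = np.random.default_rng(0)
scene = rng.random((16, 16))
reference = np.fft.fft2(scene)[3, 2]  # row index v=3, column index u=2
for n in range(3, 7):
    estimate = fourier_coefficient(scene, u=2, v=3, n_steps=n)
    print(n, np.isclose(estimate, reference))
```

In the noise-free case every n ≥ 3 gives the same coefficient; the differences between step counts only appear once detector noise is added to `readings`.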

A large field of view is in high demand for disease diagnosis in clinical applications of optical coherence tomography (OCT) and OCT angiography (OCTA) imaging. Due to limits on the optical scanning range, the scanning speed, or the data processing speed, most current clinical OCT systems can acquire and process only a relatively small region at one time, and a mosaic image for disease analysis is generated from multiple adjacent small-region images using registration algorithms. In this work, we investigated performing cross-correlation (instead of phase-correlation) in the workflow of the Fourier–Mellin transform (FMT) method (called dual-cross-correlation-based translation and rotation registration, DCCTRR) for calculating translation and orientation offsets and compared its performance to the FMT method used for OCTA image alignment. Both phantom and in vivo experiments were implemented for comparisons, and the results quantitatively demonstrate that DCCTRR can align OCTA images with a lower overlap rate, which could improve the scanning efficiency of large-scale imaging in clinical applications.
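The difference between cross-correlation and phase-correlation comes down to whether the cross-power spectrum is normalized before the inverse transform. The sketch below covers only the translation step for circularly shifted images; the rotation step of a Fourier–Mellin workflow (log-polar resampling) is omitted, and the function name `estimate_shift` is an assumption rather than the DCCTRR implementation.

```python
import numpy as np

def estimate_shift(reference, moving, normalize=False):
    """Estimate the integer (dy, dx) shift taking `reference` to `moving`.

    normalize=False gives plain cross-correlation; normalize=True gives
    phase correlation (unit-magnitude cross-power spectrum).
    """
    cross_power = np.fft.fft2(moving) * np.conj(np.fft.fft2(reference))
    if normalize:
        cross_power = cross_power / (np.abs(cross_power) + 1e-12)
    corr = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks beyond the midpoint correspond to negative shifts.
    return tuple(
        int(p) if p <= s // 2 else int(p - s)
        for p, s in zip(peak, corr.shape)
    )

# Usage: recover a known circular shift of a random test image.
rng = np.random.default_rng(0)
ref_img = rng.random((64, 64))
mov_img = np.roll(ref_img, shift=(5, -7), axis=(0, 1))
print(estimate_shift(ref_img, mov_img))
print(estimate_shift(ref_img, mov_img, normalize=True))
```

On this idealized input both variants return the same offset; how each behaves as the overlap between real images shrinks is the question the study addresses experimentally.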

We characterize the current crowding effect for microwave radiation on a chip surface based on a quantum wide-field microscope combined with a wide-field reconstruction technique. A swept microwave signal with a power of 0–30 dBm is supplied to a dumbbell-shaped microstrip antenna, and the significant differences in microwave magnetic-field amplitudes attributed to the current crowding effect are experimentally observed in a 2.20 mm × 1.22 mm imaging area. The normalized microwave magnetic-field amplitude along the horizontal geometrical center of the image area further demonstrates the feasibility of the characterization of the current crowding effect. The experiments indicate that the proposed approach is suitable for characterizing anomalous areas of a radio-frequency chip surface.

The laser-induced damage detection images used in high-power laser facilities have a dark background, few textures with sparse and small-sized damage sites, and slight degradation caused by slight defocus and optical diffraction, which make the image superresolution (SR) reconstruction challenging. We propose a non-blind SR reconstruction method based on UNet that mixes high-, intermediate-, and low-frequency information at each stage of pixel reconstruction. We simplify the channel attention mechanism and activation function to focus on the useful channels and keep the global information in the features. We pay more attention to the damage area in the loss function of our end-to-end deep neural network. To construct a data set of high- and low-resolution image pairs, we precisely measure the point spread function (PSF) of a low-resolution imaging system by using a Bernoulli calibration pattern; the influence of different distances and lateral positions on the PSFs is also considered. A high-resolution camera is used to acquire the ground-truth images, which are convolved with the measured PSFs to create the low-resolution images of the paired data set. Trained on the data set, our network has achieved better results, which proves the effectiveness of our method.

The array spatial light field is an effective means for improving imaging speed in single-pixel imaging. However, distinguishing the intensity values of each sub-light field in the array spatial light field requires either an array detector or a time-consuming deep-learning algorithm. To address this problem, we propose measurable speckle gradation Hadamard single-pixel imaging (MSG-HSI), which makes the most of the refresh mechanism of the device that generates the Hadamard speckle patterns and of the high sampling rate of the bucket detector, and is capable of measuring the light intensity fluctuation of the array spatial light field with only a simple bucket detector. The numerical and experimental results indicate that data acquisition in MSG-HSI is 4 times faster than in traditional Hadamard single-pixel imaging. Moreover, imaging quality in MSG-HSI can be further improved by image stitching technology. Our approach may open a new perspective for improving the imaging speed of single-pixel imaging.

The 3D location and dipole orientation of light emitters provide essential information in many biological, chemical, and physical systems. Simultaneous acquisition of both information types typically requires pupil engineering for 3D localization and dual-channel polarization splitting for orientation deduction. Here we report a geometric phase helical point spread function for simultaneously estimating the 3D position and dipole orientation of point emitters. It has a compact and simpler optical configuration compared to polarization-splitting techniques and yields achromatic phase modulation in contrast to pupil engineering based on dynamic phase, showing great potential for single-molecule orientation and localization microscopy.

The source’s energy fluctuation has a great effect on the quality of single-pixel imaging (SPI). When the method of complementary detection is introduced into an SPI camera system and the echo signal is corrected with the summation of the light intensities recorded by two complementary detectors, we demonstrate, by both experiments and simulations, that complementary single-pixel imaging (CSPI) is robust to the source’s energy fluctuation. The superiority of the CSPI structure is also discussed in comparison with previous SPI via signal monitoring.
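
The correction described above can be illustrated with a toy model. The following is a minimal sketch, not the authors' implementation: it assumes binary patterns, an ideal pair of detectors that record the pattern and its complement, and a multiplicative per-shot source gain (the names `source_gain`, `complementary_measure`, and `corrected_signal` are hypothetical). Because the sum of the two detector signals is proportional to the source energy, dividing by it removes the fluctuation from each measurement.

```python
import random

def complementary_measure(obj, pattern, source_gain):
    """Return the two bucket signals for a binary pattern and its complement.

    source_gain is an assumed multiplicative model of the shot-to-shot source energy.
    """
    plus = source_gain * sum(o * p for o, p in zip(obj, pattern))
    minus = source_gain * sum(o * (1 - p) for o, p in zip(obj, pattern))
    return plus, minus

def corrected_signal(plus, minus):
    """Normalize by the summed intensity, which is proportional to the source energy."""
    return (plus - minus) / (plus + minus)

rng = random.Random(0)
obj = [0.2, 0.9, 0.4, 0.0, 0.7, 0.1, 0.5, 0.3]  # toy reflectivity map
patterns = [[rng.randint(0, 1) for _ in obj] for _ in range(16)]

# Same patterns measured with a stable source and with a fluctuating source.
stable = [corrected_signal(*complementary_measure(obj, p, 1.0)) for p in patterns]
noisy = [
    corrected_signal(*complementary_measure(obj, p, rng.uniform(0.5, 1.5)))
    for p in patterns
]
```

In this idealized model the corrected sequences `stable` and `noisy` agree to rounding error, so any reconstruction computed from them is unaffected by the source fluctuation. Detector noise and background light, which the sketch ignores, would limit this in practice.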

Lens-free on-chip microscopy with RGB LEDs (LFOCM-RGB) provides a portable, cost-effective, and high-throughput imaging tool for resource-limited environments. However, the weak coherence of LEDs limits high-resolution imaging, and the luminous surfaces of the LED chips on the RGB LED do not overlap, so that coherence-enhancement schemes tend to undermine the portability and low cost of the implementation. Here, we propose a specially designed pinhole array to enhance coherence in a portable and cost-effective implementation. It modulates the three-color beams from the RGB LED separately so that they effectively overlap on the sample plane while reducing the effective light-emitting area for better spatial coherence. The separate modulation of the spatial coherence allows the temporal coherence to be modulated separately by single spectral filters rather than by expensive triple spectral filters. Based on the pinhole array, the LFOCM-RGB simply and effectively realizes high-resolution imaging in a portable and cost-effective implementation, offering much flexibility for various applications in resource-limited environments.

It is difficult to extract targets under strong environmental disturbance in practice. Ghost imaging (GI) is an innovative anti-interference imaging technology. In this paper, we propose a scheme for target extraction based on characteristic-enhanced pseudo-thermal GI. Unlike traditional GI which relies on training the detected signals or imaging results, our scheme trains the illuminating light fields using a deep learning network to enhance the target’s characteristic response. The simulation and experimental results prove that our imaging scheme is sufficient to perform single- and multiple-target extraction at low measurements. In addition, the effect of a strong scattering environment is discussed, and the results show that the scattering disturbance hardly affects the target extraction effect. The proposed scheme presents the potential application in target extraction through scattering media.

In this Letter, we propose a pixel-excitation-gating technology for high-sensitivity nonlinear imaging excited by femtosecond pulses at a GHz repetition rate, which can enhance the signal quality of nonlinear imaging. To validate this technology, we apply it to a customized two-photon excitation (TPE) fluorescence microscope that uses a GHz femtosecond pulse fiber laser as the excitation source. Compared with the excitation using a continuous GHz pulse train, up to ∼4-fold enhancement of fluorescence signal can be obtained when the excitation pulse train is gated with a 25% duty cycle of the pixel dwell time, under constant average power. It is anticipated that pixel-level-excitation gating can be an add-on solution for fluorescence signal enhancement in nonlinear imaging systems that are excited by GHz femtosecond pulses. Moreover, the pixel-level excitation control would allow more flexible excitation.

In this work, we demonstrate a single-photon lidar based on a polarization suppression underwater backscatter method. The system adds polarization modules at the transmitter and receiver, which increases the full width at half-maximum of the system response function by about 5 times, improving the signal-to-background ratio, ranging accuracy, and imaging effect. Meanwhile, we optimize a sparsity adaptive matching pursuit algorithm that achieves the reconstruction of target images with a 7.3 attenuation length between the system and the target. The depth resolution of the system under different scattering conditions is studied. This work provides a new method for underwater imaging.

The study of optical correlation functions initiated the development of many quantum techniques, with ghost imaging (GI) being one of the great achievements. After the first demonstration with entangled sources, the physics and improvements of GI attracted much interest. Among existing studies, GI with classical sources has provoked debates and ideas to the greatest extent. Toward better understanding and practical applications of GI, fundamental theory, various designs of illumination patterns and reconstruction algorithms, demonstrations, and field tests have been reported, greatly enriching the topic of GI. In this paper, we sketch the evolution of GI, focusing mainly on the basic idea, the properties and advantages, and the progress toward applications of GI with classical sources, and provide our discussion looking into the future.

Motion blur restoration is essential for the imaging of moving objects, especially for single-pixel imaging (SPI), which requires multiple measurements. To reconstruct the image of a moving object with multiple motion modes, we propose a novel motion blur restoration method of SPI using geometric moment patterns. We design a novel localization method that uses normalized differential first-order moments and central moment patterns to determine the object’s translational position and rotation angle information. Then, we perform motion compensation by using shifting Hadamard patterns. Our method effectively improves the detection accuracy of multiple motion modes and enhances the quality of the reconstructed image. We perform simulations and experiments, and the results validate the effectiveness of the proposed method.
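
To make the localization step concrete, here is a minimal sketch of how geometric moment patterns can yield position and rotation angle from bucket values alone. It is an illustration under assumptions, not the proposed method: it uses plain (non-differential) moment patterns, ideal noise-free measurements, and the textbook relations for the centroid and principal-axis angle; the function names are hypothetical. Each call to `single_pixel_measure` stands for one single-pixel measurement with one illumination pattern.

```python
import math

def single_pixel_measure(image, pattern):
    """One bucket value: the scene weighted by an illumination pattern(x, y)."""
    return sum(
        image[y][x] * pattern(x, y)
        for y in range(len(image))
        for x in range(len(image[0]))
    )

def centroid(image):
    """Translational position from the zeroth- and first-order moments."""
    m00 = single_pixel_measure(image, lambda x, y: 1.0)
    m10 = single_pixel_measure(image, lambda x, y: float(x))
    m01 = single_pixel_measure(image, lambda x, y: float(y))
    return m10 / m00, m01 / m00

def orientation(image):
    """Principal-axis angle (radians, image coordinates) from central moments."""
    cx, cy = centroid(image)
    mu20 = single_pixel_measure(image, lambda x, y: (x - cx) ** 2)
    mu02 = single_pixel_measure(image, lambda x, y: (y - cy) ** 2)
    mu11 = single_pixel_measure(image, lambda x, y: (x - cx) * (y - cy))
    return 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)

def blank(size):
    return [[0.0] * size for _ in range(size)]

# Toy scenes: a horizontal bar and a short diagonal line.
bar = blank(8)
for x in range(2, 6):
    bar[3][x] = 1.0

diagonal = blank(8)
for i in range(1, 4):
    diagonal[i][i] = 1.0
```

For the horizontal bar, `centroid(bar)` gives `(3.5, 3.0)` and the orientation is zero; for the diagonal line the orientation is π/4. Six measurements per frame are enough for this estimate, which is why moment patterns are attractive for tracking between the Hadamard measurements used for reconstruction.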

Edge detection for low-contrast phase objects cannot be performed directly by the spatial difference of intensity distribution. In this work, an all-optical diffractive neural network (DPENet) based on the differential interference contrast principle to detect the edges of phase objects in an all-optical manner is proposed. Edge information is encoded into an interference light field by dual Wollaston prisms without lenses and light-speed processed by the diffractive neural network to obtain the scale-adjustable edges. Simulation results show that DPENet achieves F-scores of 0.9308 (MNIST) and 0.9352 (NIST) and enables real-time edge detection of biological cells, achieving an F-score of 0.7462.

A concept of divergence angle of light beams (DALB) is proposed to analyze the depth of field (DOF) of a 3D light-field display system. The mathematical model between DOF and DALB is established, and the conclusion that DOF and DALB are inversely proportional is drawn. To reduce DALB and generate clear depth perception, a triple composite aspheric lens structure with a viewing angle of 100° is designed and experimentally demonstrated. The DALB-constrained 3D light-field display system significantly improves the clarity of 3D images and also performs well in imaging at a 3D scene with a DOF over 30 cm.

In this study, we propose an underwater ghost-imaging scheme using a modulation pattern that combines offset-position pseudo-Bessel-ring (OPBR) and random binary (RB) speckle pattern illumination. We design the experiments based on modulation rules to order the OPBR speckle patterns. We retrieve ghost images with the OPBR beam at different modulation speckle sizes. The obtained ghost images have a better contrast-to-noise ratio than RB beam ghost imaging under the same conditions. We verify the results in both experiment and simulation. In addition, we check the image quality at different turbidities. Furthermore, we demonstrate that the OPBR speckle pattern also provides better image quality for other objects. The proposed method promises wide applications in highly scattering media such as the atmosphere and turbid water.

Hadamard single-pixel imaging is an appealing imaging technique due to its low hardware complexity and low industrial cost. To improve imaging efficiency, many studies have focused on sorting Hadamard patterns to obtain reliable reconstructed images with very few samples. In this study, we propose an efficient Hadamard basis sampling strategy that employs an exponential probability function to sample Hadamard patterns along the direction in which the energy of the Hadamard spectrum is concentrated. We use a compressed-sensing algorithm for image reconstruction. The simulation and experimental results show that this sampling strategy can reconstruct objects reliably and preserve the edges and details of images.

Non-line-of-sight (NLOS) imaging is an emerging technique for detecting objects behind obstacles or around corners. Recent studies on passive NLOS mainly focus on steady-state measurement and reconstruction methods, which show limitations in the recognition of moving targets. We propose what is, to the best of our knowledge, a novel event-based passive NLOS imaging method. We acquire asynchronous event-based data of the diffusion spot on the relay surface, which contain detailed dynamic information about the NLOS target and efficiently ease the degradation caused by target movement. In addition, we demonstrate the event-based cues based on the derivation of an event-NLOS forward model. Furthermore, we propose the first event-based NLOS imaging data set, EM-NLOS, and the movement feature is extracted by time-surface representation. We compare the reconstructions from event-based data with those from frame-based data. The event-based method performs well on peak signal-to-noise ratio and learned perceptual image patch similarity, which are 20% and 10% better, respectively, than those of the frame-based method.

We simulate the measurements of an active bifocal terahertz imaging system to reproduce the ability of the system to detect the internal structure of foams having embedded defects. Angular spectrum theory and geometric optics tracing are used to calculate the incident and received electric fields of the system and the scattered light distribution of the measured object. The finite-element method is also used to calculate the scattering light distribution of the measured object for comparison with the geometric optics model. The simulations are consistent with the measurements at the central axis of the horizontal stripe defects.

Photoelectron spectroscopy is a powerful tool in characterizing the electronic structure of materials. To investigate the specific region of interest with high probing efficiency, in this work we propose a compact in situ microscope to assist photoelectron spectroscopy. The configuration of long objective distance of 200 mm with two-mirror reflection has been introduced. Large magnification of 5× to 100×, lateral resolution of 4.08 µm, and longitudinal resolution of 4.49 µm have been achieved. Meanwhile, the testing result shows larger focal depth of this in situ optical microscope. Similar configurations could also be applied to other electronic microscopes to improve their probing capability.

Multi-channel detection is an effective way to improve data throughput of spectral-domain optical coherence tomography (SDOCT). However, current multi-channel OCT requires multiple detectors, which increases the complexity and cost of the system. We propose a novel multi-channel detection design based on a single spectrometer. Each camera pixel receives interferometric spectral signals from all the channels but with a spectral shift between two channels. This design effectively broadens the spectral bandwidth of each pixel, which reduces relative intensity noise (RIN) by √M times with M being the number of channels. We theoretically analyzed the noise of the proposed design under two cases: shot-noise limited and electrical noise or RIN limited. We show both theoretically and experimentally that this design can effectively improve the sensitivity, especially for electrical noise or RIN-dominated systems.

Polarized hyperspectral imaging, which has been widely studied worldwide, can obtain four-dimensional data including polarization, spectral, and spatial domains. To simplify data acquisition, compressive sensing theory is utilized in each domain. The polarization information represented by the four Stokes parameters currently requires at least two compressions. This work achieves full-Stokes single compression by introducing deep learning reconstruction. The four Stokes parameters are modulated by a quarter-wave plate (QWP) and a liquid crystal tunable filter (LCTF) and then compressed into a single light intensity detected by a complementary metal oxide semiconductor (CMOS). Data processing involves model training and polarization reconstruction. The reconstruction model is trained by feeding the known Stokes parameters and their single compressions into a deep learning framework. Unknown Stokes parameters can be reconstructed from a single compression using the trained model. Benefiting from the acquisition simplicity and reconstruction efficiency, this work well facilitates the development and application of polarized hyperspectral imaging.

For speckle-correlation-based scattering imaging, an iris is generally placed next to the diffuser to magnify the speckle size and enhance the speckle contrast, which limits the light flux and makes the setup cooperative. Here, we experimentally demonstrate a non-iris speckle-correlation imaging method associated with an image resizing process. The experimental results demonstrate that, by estimating an appropriate resizing factor, our method can achieve high-fidelity noncooperative speckle-correlation imaging by digital resizing of the raw captures or by on-chip pixel binning, without an iris. The method opens a new door for noncooperative high-frame-rate speckle-correlation imaging and benefits scattering imaging of dynamic objects hidden behind opaque barriers.

Realizing high-fidelity optical information transmission through a scattering medium is of vital importance in both science and applications, such as short-range fiber communication and optical encryption. Theoretically, an input wavefront can be reconstructed by inverting the transmission matrix of the scattering medium. However, this deterministic method for retrieving light field information encoded in the wavefront has not yet been experimentally demonstrated. Herein, we demonstrate light field information transmission through different scattering media with near-unity fidelity. Multi-dimensional optical information can be delivered through either a multimode fiber or a ground glass without relying on any averaging or approximation, where their Pearson correlation coefficients can be up to 99%.
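
The deterministic retrieval mentioned above amounts to solving a linear system. The sketch below is only an illustration of that idea under strong assumptions: a small, square, well-conditioned, noise-free transmission matrix with made-up entries, and a plain Gaussian-elimination solver written for this example. It is not the experimental procedure of the work, and the function names are hypothetical.

```python
import math

def apply_tm(tm, field):
    """Forward model: output field = transmission matrix times input field."""
    return [sum(t * x for t, x in zip(row, field)) for row in tm]

def solve_linear(tm, output):
    """Recover the input field by Gaussian elimination with partial pivoting."""
    n = len(tm)
    aug = [list(row) + [out] for row, out in zip(tm, output)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("transmission matrix is singular or ill-conditioned")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x

def pearson(a, b):
    """Pearson correlation coefficient of two real-valued sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)

# Hypothetical 3-mode transmission matrix and complex input field.
tm = [
    [1 + 1j, 0.5, 0.2j],
    [0.3, 2 - 1j, 0.1],
    [0.4j, 0.2, 1.5 + 0.5j],
]
sent = [1 + 0j, 0.5j, -0.8 + 0.2j]
received = apply_tm(tm, sent)
recovered = solve_linear(tm, received)
fidelity = pearson([abs(v) for v in sent], [abs(v) for v in recovered])
```

In this noise-free toy case `recovered` matches `sent` to rounding error. With a measured transmission matrix, noise and ill-conditioning would reduce the achievable correlation, which is what makes near-unity experimental fidelity notable.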

This paper presents an improved method for imaging in turbid water by using the individual strengths of the quadrature lock-in discrimination (QLD) method and the retinex method. At first, the high-speed QLD is performed on images, aiming at capturing the ballistic photons. Then, we perform the retinex image enhancement on the QLD-processed images to enhance the contrast of the image. Next, the effect of uneven illumination is suppressed by using the bilateral gamma function for adaptive illumination correction. The experimental results depict that the proposed approach achieves better enhancement than the existing approaches, even in a high-turbidity environment.

In this Letter, we present a low-cost, high-resolution spectrometer design for ultra-high resolution optical coherence tomography (UHR-OCT), in which multiple standard achromatic lenses are combined to replace the expensive F-theta lens to achieve a comparable performance. For UHR-OCT, the spectrometer plays an important role in high-quality 3D image reconstruction. Typically, an F-theta lens is used in spectrometers as the Fourier lens to focus the dispersed light on the sensor array, and this F-theta lens is one of the most expensive components in spectrometers. The advantage of F-theta lens over the most widely used achromatic lens is that the aberrations (mainly spherical aberration, SA) are corrected, so the foci of the dispersed optical beams (at different wavelengths) with different incident angles could be placed on the sensor array simultaneously. For the achromatic lens, the foci of the center part of the spectrum are farther than those on the side in the longitudinal direction, causing degradations of the spectral resolution. Furthermore, in comparison with the achromatic lens with the same focal length, those with smaller diameters have stronger SA, but small lenses are what we need for making spectrometers compact and stable. In this work, we propose a simple method of using multiple long-focal-length achromatic lenses together to replace the F-theta lens, which is >8-fold cheaper based on the price of optical components from Thorlabs, US. Both simulations and in vivo experiments were implemented to demonstrate the performance of the proposed method.

Single-pixel imaging can reconstruct the image of an object even when the light traveling from the object to the detector is scattered or distorted. Most single-pixel imaging methods obtain only the distribution of the transmittance or reflectivity of the object. Some methods can obtain extra information, such as color and polarization. However, no existing method can obtain vibration information when the object is vibrating during the measurement. Vibration information is very important because unexpected vibration often indicates abnormal conditions. In this Letter, we introduce a method to obtain vibration information with frequency modulation single-pixel imaging. This method uses a light source with a special pattern to illuminate the object and analyzes the frequency of the total light intensity signal transmitted or reflected by the object. Compared with other single-pixel imaging methods, frequency modulation single-pixel imaging can obtain vibration information while maintaining a high signal-to-noise ratio, and it has potential for detecting hidden facilities under construction or instruments in operation.

To date, fluorescence imaging systems have all relied on at least one beam splitter (BS) to ensure the separation of excitation light and fluorescence. Here, we report a GaN LED integrated with a SiO2/TiO2 multilayer long-pass filter and consider it as a potential source for imaging systems. Experimental results indicate that the GaN LED shows blue emission peaked at 470.3 nm and can be used to excite dye materials. Integrated with a long-pass filter (550 nm), the light source can be used to establish real-time fluorescence detection for dyes that emit light above 550 nm. More interestingly, with this source, a real-time imaging system can convert signature words written with the dyes, such as ‘N J U P T’, into CCD images. This work may lead to a new strategy for integrating light sources and BS mirrors to build miniature and smart fluorescence imaging systems.

Snapshot spectral ghost imaging, which can acquire dynamic spectral imaging information in the field of view, has attracted increasing attention in recent years. Studies have shown that optimizing the fluctuation of light fields is essential for improving the sampling efficiency and reconstruction quality of ghost imaging. However, the optimization of broadband light fields in snapshot spectral ghost imaging is challenging because of the dispersion of the modulation device. In this study, by judiciously introducing a hybrid refraction/diffraction structure into the light-field modulation, snapshot spectral ghost imaging with broadband super-Rayleigh speckles was demonstrated. The simulation and experiment results verified that the contrast of speckles in a broad range of wavelengths was significantly improved, and the imaging system had superior noise immunity.

A real-time imaging system based on a compact terahertz laser is constructed by employing one off-axis parabolic mirror and one silicon lens. Terahertz imaging of water, water stains, leaf veins, human hairs, and metal wire is demonstrated. An imaging resolution of 68 µm is achieved. The experiments show that this compact and simplified imaging system is suitable for penetration demonstration of terahertz light, water distribution measurement, and imaging analysis of thin samples.

Multiple object tracking (MOT) in unmanned aerial vehicle (UAV) videos has attracted attention. Because of the observation perspectives of UAV, the object scale changes dramatically and is relatively small. Besides, most MOT algorithms in UAV videos cannot achieve real-time due to the tracking-by-detection paradigm. We propose a feature-aligned attention network (FAANet). It mainly consists of a channel and spatial attention module and a feature-aligned aggregation module. We also improve the real-time performance using the joint-detection-embedding paradigm and structural re-parameterization technique. We validate the effectiveness with extensive experiments on UAV detection and tracking benchmark, achieving new state-of-the-art 44.0 MOTA, 64.6 IDF1 with 38.24 frames per second running speed on a single 1080Ti graphics processing unit.

In-situ laser-induced surface damage inspection plays a key role in protecting the large aperture optics in an inertial confinement fusion (ICF) high-power laser facility. In order to improve the initial damage detection capabilities, an in-situ inspection method based on image super-resolution and adaptive segmentation method is presented. Through transfer learning and integration of various attention mechanisms, the super-resolution reconstruction of darkfield images with less texture information is effectively realized, and, on the basis of image super-resolution, an adaptive image segmentation method is designed, which effectively adapts to the damage detection problems under conditions of uneven illumination and weak signal. An online experiment was carried out by using edge illumination and the telescope optical imaging system, and the validity of the method was proved by the experimental results.

High-speed target three-dimensional (3D) trajectory and velocity measurement methods have important uses in many fields, including explosive debris and rotating specimen trajectory tracking. The conventional approach uses a binocular system with two high-speed cameras to capture the target’s 3D motion information. Hardware cost for the conventional approach is high, and accurately triggering several high-speed cameras is difficult. Event-based cameras have recently received considerable attention due to advantages in dynamic range, temporal resolution, and power consumption. To address problems of camera synchronization difficulties, data redundancy, and motion blur in high-speed target 3D trajectory measurement, this Letter proposes a 3D trajectory measurement method based on a single-event camera and a four-mirror adaptor. The 3D trajectory and velocity of a particle flight process and a marker on a rotating disc were measured with the proposed method, and the results show that the proposed method can monitor the operational state of high-speed flying and rotating objects at a very low hardware cost.

Light field imaging has shown significance in research fields for its high-temporal-resolution 3D imaging ability. However, in scenes of light field imaging through scattering, such as biological imaging in vivo and imaging in fog, the quality of 3D reconstruction will be severely reduced due to the scattering of the light field information. In this paper, we propose a deep learning-based method of scattering removal of light field imaging. In this method, a neural network, trained by simulation samples that are generated by light field imaging forward models with and without scattering, is utilized to remove the effect of scattering on light fields captured experimentally. With the deblurred light field and the scattering-free forward model, 3D reconstruction with high resolution and high contrast can be realized. We demonstrate the proposed method by using it to realize high-quality 3D reconstruction through a single scattering layer experimentally.

Broadband super-resolution imaging is important in the optical field. To achieve super-resolution imaging, various lenses from a superlens to a solid immersion lens have been designed and fabricated in recent years. However, the imaging is unsatisfactory due to low work efficiency and narrow band. In this work, we propose a solid immersion square Maxwell’s fish-eye lens, which realizes broadband (7–16 GHz) achromatic super-resolution imaging with full width at half-maximum around 0.2λ based on transformation optics at microwave frequencies. In addition, a super-resolution information transmission channel is also designed to realize long-distance multi-source super-resolution information transmission based on the super-resolution lens. With the development of 3D printing technology, the solid immersion Maxwell’s fish-eye lens is expected to be fabricated in the high-frequency band.

In this Letter, we present B-scan-sectioned dynamic micro-optical coherence tomography (BD-MOCT) for high-quality sub-cellular dynamic contrast imaging. Dynamic micro-optical coherence tomography (D-MOCT) is a functional optical coherence tomography (OCT) technique performed on high-resolution (micron level) OCT systems; hundreds of consecutive B-scans need to be acquired for dynamic signal extraction, which requires relatively long data acquisition time. Bulk motions occurring during data acquisition (even at the micron level) may degrade the quality of the obtained dynamic contrast images. In BD-MOCT, each full B-scan is divided into several sub-B-scans, and each sub-B-scan repeats multiple times before the sample beam moves to the next sub-B-scan. After all of the sub-B-scans for a full B-scan are completely acquired, we stitch all of the sub-B-scans into the same number of full B-scans. In this way, the time interval between two consecutive stitched B-scans could be reduced multiple times for bulk-motion suppression. The performed scanning protocol modulates the scanning sequences of fast scanning and repeat scanning for improving the dynamic contrast image quality, while the total data acquisition time remains almost the same.

Bragg processing using a volume hologram offers an alternative in optical image processing in contrast to Fourier-plane processing. By placing a volume hologram near the object in an optical imaging setup, we achieve Bragg processing. In this review, we discuss various image processing methods achievable with acousto-optic modulators as dynamic and programmable volume holograms. In particular, we concentrate on the discussion of various differentiation operations leading to edge extraction capabilities.

In the integral imaging light field display, the introduction of a diffractive optical element (DOE) can solve the problem of limited depth of field of the traditional lens. However, the strong aberration of the DOE significantly reduces the final display quality. Thus, herein, an end-to-end joint optimization method for optimizing DOE and aberration correction is proposed. The DOE model is established using thickness as the variable, and a deep learning network is built to preprocess the composite image loaded on the display panel. The simulation results show that the peak signal to noise ratio value of the optimized image increases by 8 dB, which confirms that the end-to-end joint optimization method can effectively reduce the aberration problem.

We propose a new experimentally verified ghost imaging (GI) mechanism, derivative GI. Our innovation is that we use the derivatives of the intensities of the test light and the reference light for imaging. Experimental results show that by combining derivative GI with the standard GI algorithm, multiple independent signals can be obtained in one measurement. This combination greatly reduces the number of measurements and the time required for data acquisition and imaging. Derivative GI intrinsically does not produce the storage-consuming background term of GI, so it is suitable for on-chip implementation and makes practical application of GI easier.
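
The absence of a background term can be seen in a small numerical sketch. The code below is an illustration under assumptions rather than the experimental scheme: the derivatives are approximated by finite differences between consecutive frames, the patterns are independent uniform random numbers, and detection is noise-free; `derivative_gi` is a hypothetical name. Because the differenced signals have zero mean, correlating them directly yields a quantity proportional to the object, with no constant offset to store or subtract.

```python
import random

def derivative_gi(obj, num_frames, seed=0):
    """Correlate frame-to-frame differences of the bucket and reference signals."""
    rng = random.Random(seed)
    n = len(obj)
    # Reference arm: the illumination pattern recorded for every frame.
    frames = [[rng.random() for _ in range(n)] for _ in range(num_frames)]
    # Test arm: total intensity transmitted by the object for every frame.
    bucket = [sum(t * p for t, p in zip(obj, frame)) for frame in frames]
    image = [0.0] * n
    for k in range(num_frames - 1):
        d_bucket = bucket[k + 1] - bucket[k]
        for x in range(n):
            image[x] += d_bucket * (frames[k + 1][x] - frames[k][x])
    return [v / (num_frames - 1) for v in image]

obj = [0.0, 1.0, 0.0, 0.5]  # toy transmission profile
image = derivative_gi(obj, num_frames=4000)
```

For uniform patterns on [0, 1), the expected value of each pixel is the object transmission divided by 6, so dark pixels average to zero instead of to a background level. The finite-difference approximation is an assumption of this sketch; the abstract does not specify how the derivatives are obtained.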

The multimode fiber (MMF) has great potential to transmit high-resolution images with less invasive methods in endoscopy due to its large number of spatial modes and small core diameter. However, crosstalk between spatial modes inevitably occurs in MMFs, which turns the received images into speckles. A conditional generative adversarial network (GAN) composed of a generator and a discriminator is utilized to reconstruct images from the received speckles. We construct an experimental MMF imaging system that transmits over a 1 m MMF with a 50 μm core. Compared with the conventional U-net method, this conditional GAN can reconstruct images with fewer training data to achieve the same performance and shows higher feature extraction capability.

The anisotropy of the thermal properties of an Yb,Nd:Sc2SiO5 crystal is investigated over the temperature range of 293 to 573 K. Based on a systematic study of thermal expansion, thermal diffusivity, and specific heat, the thermal conductivity of Yb,Nd:Sc2SiO5 crystals oriented along (100), (010), (001), and (406) is calculated to be 3.46, 2.60, 3.35, and 3.68 W/(m·K), respectively. The laser output anisotropy of a continuous-wave (CW) and tunable laser is characterized accordingly. A maximum output power of 6.09 W is achieved in the Yb,Nd:Sc2SiO5 crystal with the (010) direction, corresponding to a slope efficiency of 48.56%. The tuning wavelength range of the Yb,Nd:Sc2SiO5 crystal oriented along (100), (010), and (001) is 68, 67, and 65 nm, respectively. The effects of thermal properties on CW laser performance are discussed.

Applications of ghost imaging are limited by the requirement for a large number of samplings. Based on the observation that the edge area contains more information and thus requires a larger number of samplings, we propose a feedback ghost imaging strategy to reduce the number of required samplings. The field of view is gradually concentrated onto the edge area, with the size of the illumination speckles getting smaller. Experimentally, images of high quality and resolution are successfully reconstructed with far fewer samplings and a linear algorithm.

An enhanced rapid lifetime determination method is proposed for time-resolved fluorescence imaging to discriminate substances with similar fluorescence lifetimes in forensic examination. In this method, an image exclusive-OR operation with an adaptively chosen filter threshold is used to extract the region of interest from dual-gated fluorescence intensity images, and the fluorescence lifetime image is then reconstructed with the rapid lifetime determination algorithm. Furthermore, maximum and minimum threshold filtering is developed to automatically enhance the visualization of the lifetime image. In proof-of-concept experiments, compared with traditional fluorescence intensity imaging and the conventional rapid lifetime determination method, the proposed method automatically distinguishes altered and obliterated documents written with two brands of highlighters that have the same color and close fluorescence lifetimes.
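
To make the lifetime reconstruction step concrete, the following is a minimal sketch of the textbook two-gate rapid lifetime determination formula for a mono-exponential decay, τ = Δt / ln(D1/D2), where D1 and D2 are intensities integrated in two equal-width gates separated by a delay Δt. It covers only that step; the exclusive-OR region extraction and the threshold filtering of the abstract are not reproduced. The function names, gate timings, and lifetimes are hypothetical illustration values, not taken from the source.

```python
import math


def gate_signal(amplitude, tau, start, width):
    """Integral of amplitude * exp(-t / tau) over the gate [start, start + width]."""
    return amplitude * tau * (math.exp(-start / tau) - math.exp(-(start + width) / tau))


def rld_lifetime_map(gate1, gate2, delay):
    """Two-gate rapid lifetime determination, pixel by pixel.

    gate1, gate2: 2D lists of intensities from two equal-width gates.
    delay: time between the opening of the first and second gate.
    Pixels where the log ratio is undefined or non-positive are set to NaN.
    """
    lifetimes = []
    for row1, row2 in zip(gate1, gate2):
        out_row = []
        for d1, d2 in zip(row1, row2):
            if d1 > 0 and d2 > 0 and d1 > d2:
                out_row.append(delay / math.log(d1 / d2))
            else:
                out_row.append(float("nan"))
        lifetimes.append(out_row)
    return lifetimes


# Hypothetical example: two inks with close lifetimes (4.0 and 4.5 time units)
# and different brightness, observed with gates of width 2.0 opened 3.0 apart.
inks = [(100.0, 4.0), (80.0, 4.5)]
image1 = [[gate_signal(a, tau, 0.0, 2.0) for a, tau in inks]]
image2 = [[gate_signal(a, tau, 3.0, 2.0) for a, tau in inks]]
lifetime_image = rld_lifetime_map(image1, image2, delay=3.0)
```

Because the amplitude cancels in the ratio D1/D2, the two pixels are separated by lifetime alone even though their intensities differ, which is the property that intensity imaging lacks.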

This paper reports a method for two-dimensional (2D) enhancement and three-dimensional (3D) reconstruction of subtle traces using reflectance transformation imaging, which can effectively locate the trace area of interest and directly extract the normal data of this area. For millimeter- and micron-scale traces, we present a method of data screening, conversion, and amplification for 3D reconstruction, which successfully suppresses noise, improves surface and edge quality, and enhances the 3D effect and contrast. The method not only clearly captures the 2D and 3D morphologies of traces but also measures their sizes.

We propose a color ghost imaging approach in which the object is illuminated by three-color non-orthogonal random patterns. The object’s reflection/transmission information is received by a single-pixel detector, and both the sparsity constraint and the non-local self-similarity of the object are exploited in the image reconstruction process. Numerical simulation results demonstrate that ghost imaging via sparsity constraint and non-local self-similarity (GISCNL) clearly improves the imaging quality compared with reconstruction methods that use only the object’s sparsity. Factors affecting the quality of GISCNL, such as the number of measurements and the detection signal-to-noise ratio, are also studied.

To balance accuracy and efficiency in multiple-view triangulation with sequential images, we propose a high-efficiency propagation-based incremental triangulation (INT) method that carves three-dimensional (3D) scene points by updating with the incoming feature tracks one by one, without iterations. Building on the INT method, a more accurate iteration-limited INT method is also established, which uses a few iterations to bound the propagated errors and thereby ensures the accuracy of subsequent 3D reconstruction. Experimental results demonstrate that the proposed methods balance efficiency and accuracy across different multiple-view INT scenarios.

Single-pixel imaging techniques for edge detection are mostly based on first-order differential edge detection operators. In this paper, we present a novel edge detection scheme that combines Fourier single-pixel imaging with a second-order Laplacian of Gaussian (LoG) operator. The method uses the convolution of an LoG operator with Fourier basis patterns as the modulation patterns, extracting the edge detail of an unknown object without imaging it. Simulation and experimental results demonstrate that our scheme yields finer edge detail, especially in noisy environments, and halves the processing time compared with a traditional first-order Sobel operator.
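
The reason convolved patterns can extract edges without first imaging the object can be checked numerically. The sketch below, assuming circular convolution and a symmetric sampled LoG kernel, verifies that the single-pixel measurement taken with an LoG-convolved Fourier pattern equals the measurement that a plain Fourier pattern would give on the LoG-filtered object. All function names, the kernel size, and the value of sigma are hypothetical choices for illustration and are not the authors' implementation.

```python
import numpy as np


def log_kernel(size, sigma):
    """Sampled Laplacian-of-Gaussian kernel (odd size), shifted to zero mean."""
    ax = np.arange(size) - size // 2
    x, y = np.meshgrid(ax, ax)
    r2 = x**2 + y**2
    kernel = (r2 - 2 * sigma**2) / sigma**4 * np.exp(-r2 / (2 * sigma**2))
    return kernel - kernel.mean()


def circular_convolve(image, kernel):
    """Circular 2D convolution with the kernel center placed at the origin."""
    size = kernel.shape[0]
    padded = np.zeros(image.shape, dtype=float)
    padded[:size, :size] = kernel
    padded = np.roll(padded, (-(size // 2), -(size // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))


def fourier_pattern(n, fx, fy, phase):
    """Sinusoidal Fourier basis pattern with values in [0, 1]."""
    rows, cols = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    return 0.5 + 0.5 * np.cos(2 * np.pi * (fx * cols + fy * rows) / n + phase)


def measure(obj, pattern):
    """Idealized single-pixel measurement: total light passed by the pattern."""
    return float(np.sum(obj * pattern))


# Hypothetical test object: a bright square on a dark background.
n = 32
obj = np.zeros((n, n))
obj[10:22, 12:24] = 1.0

kernel = log_kernel(size=9, sigma=1.4)
pattern = fourier_pattern(n, fx=3, fy=5, phase=np.pi / 2)

# Measurement with the LoG-modulated pattern on the raw object ...
direct = measure(obj, circular_convolve(pattern, kernel))
# ... versus the plain pattern on the LoG-filtered (edge) object.
reference = measure(circular_convolve(obj, kernel), pattern)
```

The two values agree to numerical precision, so the Fourier spectrum assembled from such measurements is the spectrum of the edge map rather than of the object. Note that the modulated patterns contain negative values; a physical projector would need some workaround such as differential measurements, which is an assumption here and not described in the abstract.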

This paper presents a polarization descattering imaging method for underwater detection of targets with nonuniform polarization characteristics. At its core, the method accounts for the nonuniform distribution of the polarization information of the target-reflected light, which expands the application range of underwater polarization imaging. Independent component analysis is used to separate the target light from the backscattered light. Theoretical analysis and proof-of-concept experiments demonstrate the effectiveness of the proposed method in estimating target information. The proposed method estimates the target information more accurately than other polarization imaging methods.

Computational ghost imaging (CGI) has recently been intensively studied as an indirect imaging technique. However, its image quality does not yet meet the requirements of practical applications. Here, we propose a novel CGI scheme that significantly improves the imaging quality. In our scheme, the conventional CGI data processing algorithm is replaced by a compressed sensing (CS) algorithm based on a convolutional neural network (CNN). CS is first used to process the data collected by a conventional CGI device, and the processed data are then used to train a CNN that reconstructs the image. The experimental results show that our scheme produces higher-quality images than conventional CGI at the same number of samplings. Moreover, detailed comparisons between images reconstructed with the deep learning approach and with conventional CS show that our method outperforms the conventional approach.
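
For context, the conventional CGI processing that serves as the baseline here is usually the second-order correlation G(x, y) = ⟨S·I(x, y)⟩ − ⟨S⟩⟨I(x, y)⟩ between the bucket signal S and the known illumination patterns I. The sketch below simulates that baseline only; it does not implement the CS or CNN stages of the proposed scheme. The object, pattern statistics, and sample count are hypothetical.

```python
import numpy as np


def cgi_correlation(patterns, signals):
    """Conventional CGI reconstruction: <S * I> - <S><I>.

    patterns: array of shape (M, H, W) with the known illumination patterns.
    signals:  array of shape (M,) with the corresponding bucket signals.
    """
    patterns = np.asarray(patterns, dtype=float)
    signals = np.asarray(signals, dtype=float)
    centered = signals - signals.mean()
    # mean((S - <S>) * I) equals <S * I> - <S><I>
    return np.tensordot(centered, patterns, axes=(0, 0)) / len(signals)


# Hypothetical 8 x 8 object: a transmissive square on an opaque background.
rng = np.random.default_rng(0)
obj = np.zeros((8, 8))
obj[2:6, 2:6] = 1.0

# Simulated experiment: M random patterns and noiseless bucket signals.
num_samples = 4000
patterns = rng.random((num_samples, 8, 8))
signals = np.tensordot(patterns, obj, axes=([1, 2], [0, 1]))

reconstruction = cgi_correlation(patterns, signals)
similarity = np.corrcoef(reconstruction.ravel(), obj.ravel())[0, 1]
```

In this toy setting the number of samplings is many times the number of pixels. Lowering `num_samples` makes the correlation estimate visibly noisier, which is the regime where CS-based and learned reconstructions are expected to help.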

A novel scheme for photonic-aided vector millimeter-wave (mm-wave) signal generation without a digital-to-analog converter (DAC) is proposed. Based on this scheme, a 20 Gb/s 4-ary quadrature amplitude modulation (4-QAM) mm-wave signal is generated without using a DAC. The experimental results demonstrate that the bit error rate (BER) of the 20 Gb/s 4-QAM mm-wave signal falls below the hard-decision forward-error-correction threshold after wireless delivery over a distance of 1 m. Because no DAC is required, the system cost is reduced. In addition, the use of photonic technology makes the system easy to integrate, which facilitates large-scale production and application in high-speed optical communication.